Abstract

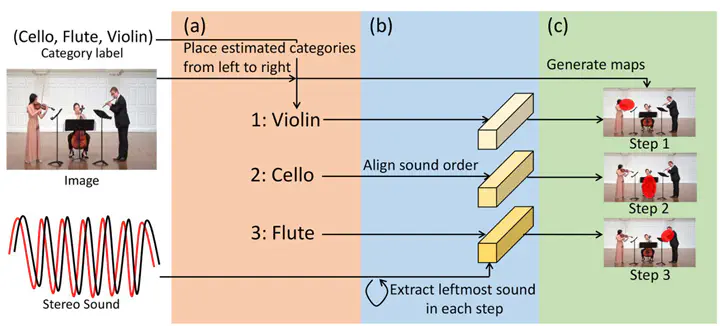

Sound localization is one of the essential tasks in audio-visual learning. In particular, stereo sound localization methods have been proposed to handle multiple sound sources. However, existing stereo sound localization methods treat sound source localization as a segmentation task and therefore require costly annotation of segmentation masks. Another serious problem is that these methods have been trained and evaluated only in controlled environments, such as a fixed camera and microphone setup with limited scene variability. Consequently, their performance on videos recorded in uncontrolled environments, such as in-the-wild videos from the Internet, has not been fully investigated. To address these problems, we propose a weakly supervised method that extends a typical stereo sound localization method by utilizing the relative spatial order of sound sources in recorded videos. The proposed method solves the annotation problem by training the localization model using only sound category labels. Furthermore, because the relative spatial order of sound sources is not affected by specific recording settings, our method can be applied effectively to videos recorded in uncontrolled environments. We also collect stereo-recorded videos from YouTube to construct a new dataset that demonstrates the applicability of the proposed method to stereo sounds recorded in various environments. By inserting a novel training step that exploits the relative order of sound sources into a typical audio-visual localization method, our method improves localization performance on both existing and newly introduced audio-visual datasets.