Learning-by-Generation: Enhancing Gaze Estimation via Controllable Generative Data and Two-Stage Training

Abstract

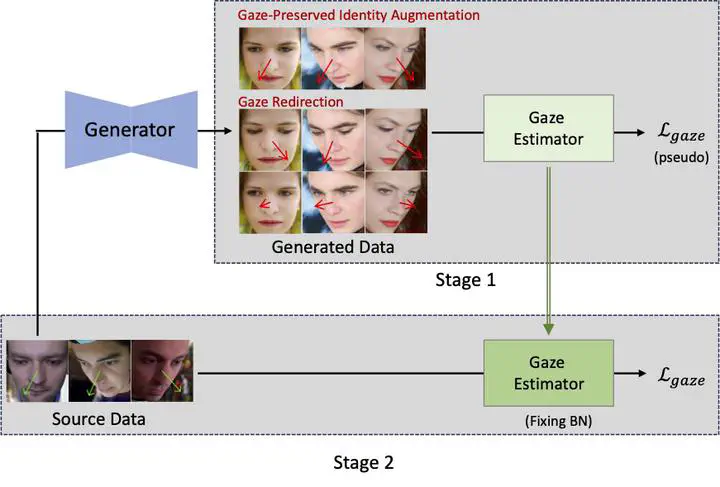

Generalization to unseen environments is crucial in appearance-based gaze estimation, but it is primarily hindered by the limited appearance diversity and gaze range of available training data. Moreover, collecting large-scale real data remains challenging because physically capturing 3D gaze directions is inefficient. In this paper, we present a novel learning-by-generation pipeline that relies exclusively on synthetic data. While prior synthetic data has shown promise in extending gaze ranges, its limited control over appearance still leads to suboptimal performance in unseen environments. Our approach, based on a generative adversarial network (GAN), enables more flexible data synthesis and addresses gaze range and appearance diversity simultaneously. Furthermore, to effectively leverage synthetic data for improved model generalization, we introduce a two-stage training pipeline that mitigates domain shift and pseudo-label noise. Extensive experiments and analyses demonstrate that the proposed learning-by-generation pipeline improves robustness across various scenarios, making it valuable both for data augmentation and for pre-training in transfer learning.