Abstract

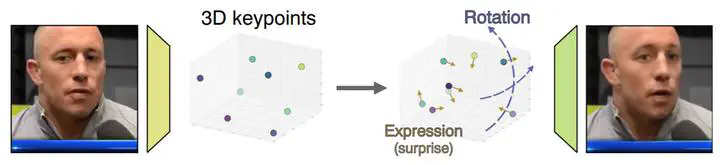

We present a novel method for simultaneously controlling the head pose and facial expressions of a given input image using a 3D keypoint-based GAN. Existing methods for simultaneous control of head pose and expressions are either unsuitable for real images or generate unnatural results, because it is not trivial to capture head pose (large changes) and expressions (small changes) at the same time. We achieve this simultaneous control by introducing 3D facial keypoints into GAN-based facial image synthesis, in contrast to the existing 2D landmark-based approach. As a result, our method faithfully handles both the large variations caused by different head poses and the subtle variations caused by changing facial expressions. Furthermore, our model takes audio as an additional input modality to further enhance the quality of the generated images. We evaluate our model on the VoxCeleb2 dataset, where it demonstrates state-of-the-art performance on both facial reenactment and facial image manipulation tasks, and the model tends not to be affected by the driving images.