Abstract

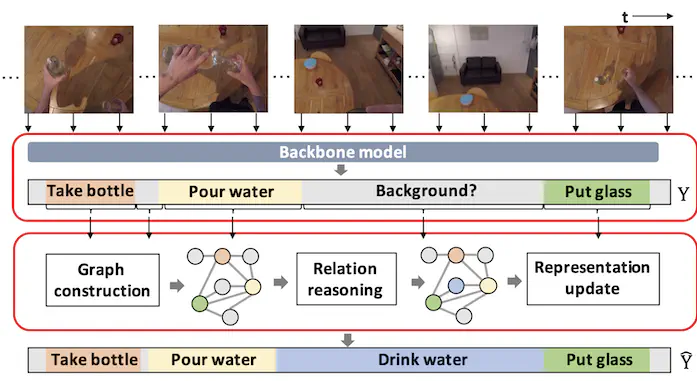

Temporal relations among multiple action segments play an important role in action segmentation, especially when observations are limited (e.g., actions are occluded by other objects or happen outside the field of view). In this paper, we propose a network module called the Graph-based Temporal Reasoning Module (GTRM) that can be built on top of existing action segmentation models to learn the relations among multiple action segments over various time spans. We model the relations using two Graph Convolution Networks (GCNs) in which each node represents an action segment. The two graphs have different edge properties to account for the boundary regression and classification tasks, respectively. By applying graph convolution, we update each node's representation based on its relations with neighboring nodes. The updated representation is then used for improved action segmentation. We evaluate our model on two challenging egocentric datasets, namely EGTEA and EPIC-Kitchens, where actions may be only partially observed due to the viewpoint restriction. The results show that our proposed GTRM outperforms state-of-the-art action segmentation models by a large margin. We also demonstrate the effectiveness of our model on two third-person video datasets, the 50Salads dataset and the Breakfast dataset.
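
To make the node update concrete, the following is a minimal sketch of a single graph convolution over segment nodes. It assumes the generic symmetric-normalized formulation (aggregate neighbor features, project, apply ReLU), which may differ from the exact layers used in GTRM. The names `temporal_adjacency`, `normalize`, and `graph_conv`, the window-based edge rule, and all feature sizes are hypothetical and chosen only for illustration.

```python
import numpy as np

def temporal_adjacency(num_segments, window=1):
    """Connect each segment to itself and to segments at most `window` positions away.

    Assumption: a simple temporal-window rule, not the edge definition of the paper.
    """
    idx = np.arange(num_segments)
    return (np.abs(idx[:, None] - idx[None, :]) <= window).astype(float)

def normalize(adj):
    """Symmetric normalization D^{-1/2} A D^{-1/2} of an adjacency with self-loops."""
    deg = adj.sum(axis=1)              # every node has a self-loop, so deg >= 1
    inv_sqrt = 1.0 / np.sqrt(deg)
    return adj * inv_sqrt[:, None] * inv_sqrt[None, :]

def graph_conv(features, adj, weight):
    """One graph convolution: aggregate neighbor features, project, apply ReLU."""
    return np.maximum(normalize(adj) @ features @ weight, 0.0)

rng = np.random.default_rng(0)
segments = rng.normal(size=(5, 8))     # 5 action segments, 8-dim features (hypothetical)
weight = rng.normal(size=(8, 4))       # stand-in for a learned projection matrix
adj = temporal_adjacency(5, window=1)
updated = graph_conv(segments, adj, weight)   # one updated representation per segment
```

Each row of `updated` mixes a segment's own features with those of its temporal neighbors. Using two such graphs with different adjacency matrices would give separate representations for the boundary regression and classification tasks.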