Abstract

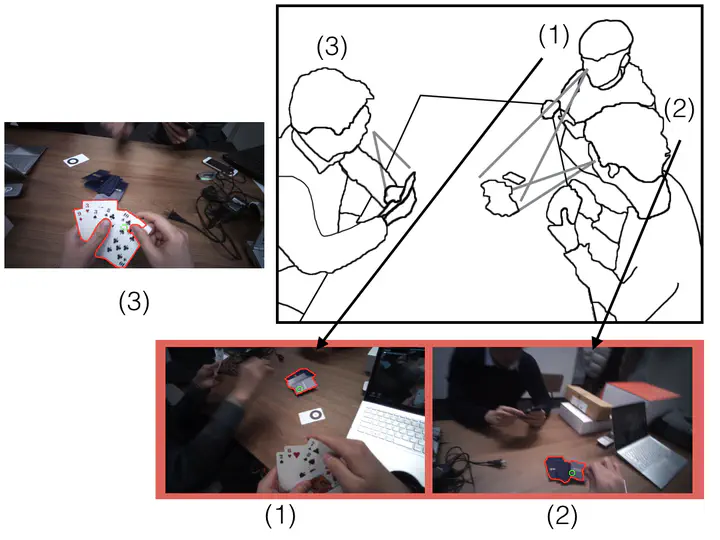

Joint attention often arises during social interactions, when individuals share focus on the same object. This work proposes an egocentric vision-based system (ego-vision system) that aims to discover the objects looked at jointly by a group of people engaged in interactive activities. The proposed system relies on a set of wearable eye-tracking cameras that provide an egocentric view of the interaction scene as well as point-of-gaze measurements for each participant. At its core, we develop a hierarchical conditional random field (CRF) based graphical model that can 1) temporally localize joint attention periods and 2) spatially segment objects of joint attention. By solving these two coupled tasks together in an iterative optimization procedure, we show that human joint attention can be reliably discovered from videos even with cluttered backgrounds and noisy gaze measurements. A new joint attention dataset, involving 2 to 4 persons, is collected and annotated for evaluating the two tasks. Experimental results demonstrate that our approach achieves state-of-the-art performance on both spatial segmentation and temporal localization of joint attention.