Assisting group activity analysis through hand detection and identification in multiple egocentric videos

Abstract

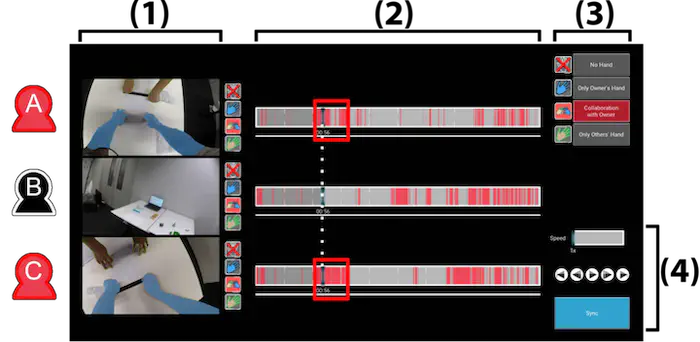

Research in group activity analysis has focused on monitoring the work and evaluating group and individual performance, which can inform potential improvements in future group interactions. As a new means to examine individual or joint actions in group activities, our work investigates the potential of detecting and disambiguating the hands of each person in first-person point-of-view videos. Building on recent developments in automated hand-region extraction from videos, we develop a new interface for browsing multiple egocentric videos that gives easy access to frames showing 1) individual action, when only the viewer's hands are detected, 2) joint action, when the collective hands of several people are detected, and 3) the viewer checking others' actions, when only the others' hands are detected. We conduct an evaluation to explore the effectiveness of our interface and the proposed hand-related features, which can help users perceive actions of interest in the complex analysis of videos involving co-occurring behaviors of multiple people.
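The three frame categories amount to a simple rule over whose hands appear in each frame of one person's video. The following is a minimal sketch of that rule, not the authors' implementation. It assumes that an upstream detection and identification step has already assigned a person ID to every detected hand, and it reads "collective hands" as the viewer's hands appearing together with at least one other person's hands. The names `FrameCategory`, `categorize_frame`, and `index_frames` are hypothetical.

```python
from enum import Enum
from typing import Dict, Iterable, List, Optional


class FrameCategory(Enum):
    INDIVIDUAL = "individual action"        # only the viewer's own hands
    JOINT = "joint action"                  # viewer's hands together with others' hands
    WATCHING_OTHERS = "checking others"     # only other people's hands


def categorize_frame(viewer_id: str, hand_owner_ids: Iterable[str]) -> Optional[FrameCategory]:
    """Assign a frame category from the owners of the hands detected in one frame.

    Assumption (hypothetical input): hand_owner_ids holds the person ID of each
    detected hand, as produced by an upstream hand identification step.
    Returns None when no hands are detected.
    """
    owners = set(hand_owner_ids)
    if not owners:
        return None
    has_viewer = viewer_id in owners
    has_others = bool(owners - {viewer_id})
    if has_viewer and has_others:
        return FrameCategory.JOINT
    if has_viewer:
        return FrameCategory.INDIVIDUAL
    return FrameCategory.WATCHING_OTHERS


def index_frames(
    viewer_id: str, per_frame_owners: Iterable[Iterable[str]]
) -> Dict[FrameCategory, List[int]]:
    """Group frame indices by category so a browser could jump straight to them."""
    index: Dict[FrameCategory, List[int]] = {c: [] for c in FrameCategory}
    for frame_idx, owners in enumerate(per_frame_owners):
        category = categorize_frame(viewer_id, owners)
        if category is not None:
            index[category].append(frame_idx)
    return index
```

For example, with viewer `"A"` and per-frame owners `[["A", "A"], ["A", "B"], ["B"], []]`, frame 0 is indexed as individual action, frame 1 as joint action, frame 2 as the viewer checking others, and frame 3 (no hands) is left out. Applying the same rule to each participant's video in turn would give one such index per egocentric view.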